SNLA381 March 2021

2.1 Advantages of PCIe Technology

PCIe is not new to automotive. In fact, PCIe has served for years as an intra-ECU, board-to-board interface between processors and automotive network interface cards (NICs).

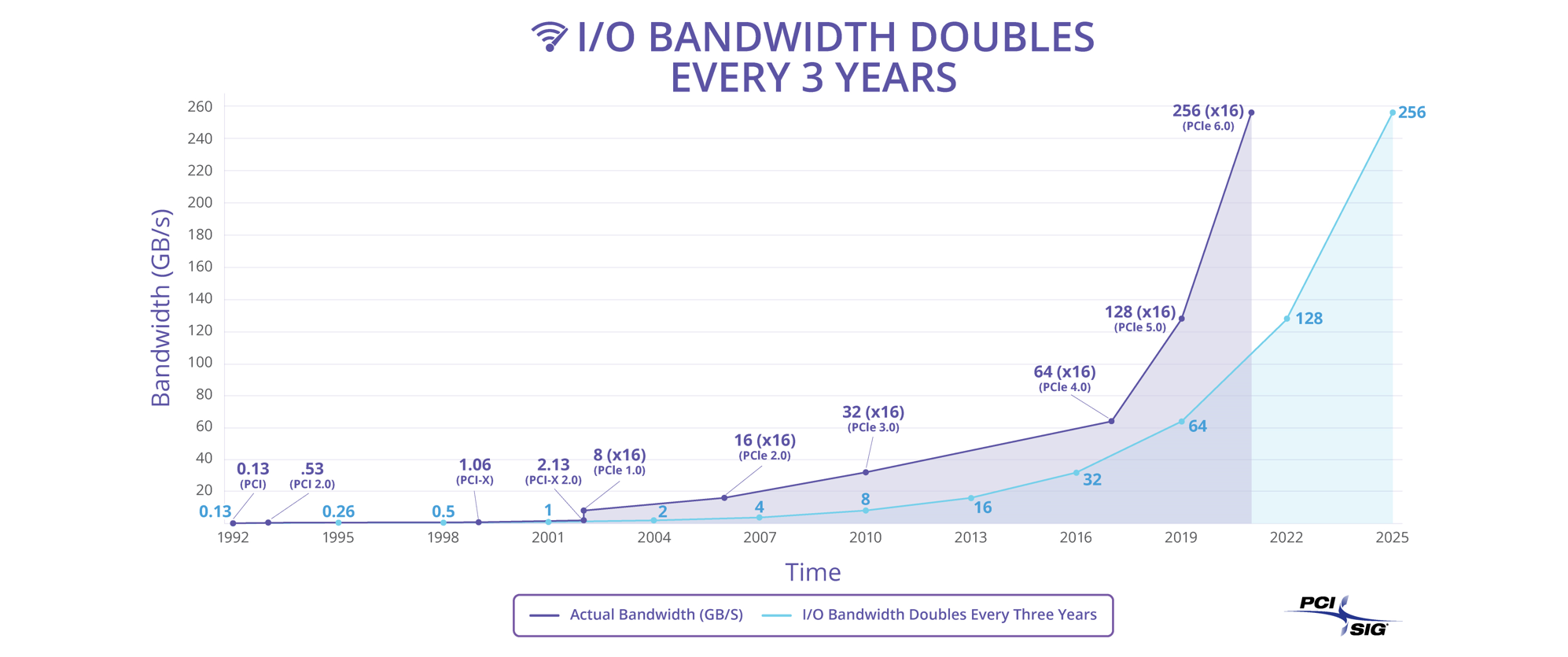

Figure 2-1 PCIe Historical Bandwidth Support for High-Speed Interconnects (source: PCI-SIG)

There are several key advantages to using PCIe:

- Bandwidth Scalability: As reported by the PCI-SIG standards body, PCIe bandwidth has doubled with each generation (for example, per-lane data rates of 8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0, and 32 GT/s for PCIe 5.0), enabling designers to implement a future-proof interface that scales with increasing bandwidth needs. PCIe also offers flexible link widths: a link can aggregate parallel lanes in x1, x2, x4, x8, or x16 configurations, scaling bandwidth with the lane count.

- Ultra-Low Latency and Reliability: PCIe incorporates minimal data overhead and guaranteed reliable transport at the hardware level, reducing latency to the order of tens of nanoseconds. By comparison, traditional networking technologies such as Ethernet rely on software-level handling in the TCP/IP layer to manage data integrity and transport reliability, adding overhead that increases latency to the order of several microseconds. This orders-of-magnitude difference in latency, compounded across each hop of an end-to-end automotive interconnect, creates significant challenges for automotive applications with real-time needs (for example, ADAS and V2X).

- Direct Memory Access (DMA): PCIe provides a built-in DMA mechanism that avoids the software packetization required by network protocol stacks, reducing CPU processing overhead. This further lowers latency for remote shared-storage use cases. Whereas other interface technologies spend CPU cycles to access, copy, and buffer memory data from another domain, the ease of DMA implementation in PCIe allows processors to access shared memory as efficiently as if it were locally available.

- PCIe Ecosystem Breadth: PCIe is non-proprietary and widely adopted in central processing units (CPUs), graphics processing units (GPUs), and hardware accelerators from a vast array of vendors. Its pervasiveness across the industry enables flexible interoperability with off-the-shelf components and readily available IP blocks. Moreover, various elements of the networking ecosystem are beginning to support PCIe natively, including SSD storage and PCIe-based switch fabrics with non-transparent bridging (NTB) topologies.
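To make the Bandwidth Scalability point concrete, the following Python sketch computes usable per-direction link bandwidth from three inputs: the per-lane transfer rate, the line-encoding efficiency (8b/10b for PCIe 1.0 and 2.0, 128b/130b for PCIe 3.0 and later), and the link width. The function name `link_bandwidth_gbytes` is hypothetical. The result also ignores TLP and DLLP protocol overhead, so actual payload throughput is lower.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency per generation.
# PCIe 1.0/2.0 use 8b/10b encoding; PCIe 3.0 and later use 128b/130b.
GENERATIONS = {
    "1.0": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
}

# Link widths named in the text.
VALID_WIDTHS = (1, 2, 4, 8, 16)


def link_bandwidth_gbytes(gen: str, width: int) -> float:
    """Return usable bandwidth in GB/s, per direction, after line encoding.

    TLP/DLLP protocol overhead is not modeled, so real payload
    throughput is somewhat lower than this figure.
    """
    if width not in VALID_WIDTHS:
        raise ValueError(f"unsupported link width x{width}")
    rate_gt_s, efficiency = GENERATIONS[gen]
    # Each transfer moves one bit per lane; divide by 8 to convert to bytes.
    return rate_gt_s * efficiency * width / 8


if __name__ == "__main__":
    for gen in ("3.0", "4.0", "5.0"):
        row = ", ".join(
            f"x{w}: {link_bandwidth_gbytes(gen, w):.2f}" for w in VALID_WIDTHS
        )
        print(f"PCIe {gen} (GB/s per direction) {row}")
```

For example, a PCIe 3.0 x16 link yields about 15.75 GB/s per direction. Each later generation doubles that figure at the same width, and halving the width halves it.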
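The Direct Memory Access point can be illustrated with a toy model of a descriptor-driven DMA engine. This is a common design for PCIe endpoints that act as bus masters. The CPU only posts a small descriptor (source, destination, length), and the engine then moves the data. On real hardware, that transfer happens through PCIe memory read and write TLPs rather than CPU copy loops. The descriptor layout and class names are hypothetical, and Python `bytearray` objects stand in for the two memory regions.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Descriptor:
    """Hypothetical DMA descriptor: copy `length` bytes from src to dst."""
    src: int     # byte offset in the source (e.g., remote) memory region
    dst: int     # byte offset in the destination (local) memory region
    length: int  # number of bytes to move


class DmaEngine:
    """Toy model of a descriptor-ring DMA engine on a PCIe endpoint."""

    def __init__(self, src_mem: bytearray, dst_mem: bytearray) -> None:
        self.src_mem = src_mem
        self.dst_mem = dst_mem
        self.ring: deque = deque()  # descriptors posted by the CPU
        self.completed = 0          # descriptors the engine has processed

    def post(self, desc: Descriptor) -> None:
        """CPU side: validate and enqueue; constant work regardless of length."""
        if min(desc.src, desc.dst, desc.length) < 0:
            raise ValueError("negative offset or length")
        if (desc.src + desc.length > len(self.src_mem)
                or desc.dst + desc.length > len(self.dst_mem)):
            raise ValueError("descriptor out of bounds")
        self.ring.append(desc)

    def run(self) -> None:
        """Engine side: process posted descriptors. On hardware this is done
        with PCIe memory read/write TLPs, not CPU instructions."""
        while self.ring:
            d = self.ring.popleft()
            self.dst_mem[d.dst:d.dst + d.length] = self.src_mem[d.src:d.src + d.length]
            self.completed += 1


if __name__ == "__main__":
    remote = bytearray(b"sensor-frame-0001".ljust(32, b"\0"))
    local = bytearray(32)
    engine = DmaEngine(remote, local)
    engine.post(Descriptor(src=0, dst=4, length=17))
    engine.run()
    print(bytes(local[4:21]))
```

The CPU-side cost of `post` is the same for a 16-byte transfer as for a multi-megabyte one. That fixed cost is the CPU overhead saving the DMA bullet describes.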
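For the NTB topologies mentioned under PCIe Ecosystem Breadth, the core idea is address translation between separate PCIe domains. A memory-window BAR in one domain forwards accesses to a translated address in the peer domain. The following Python sketch models a single such window. The class name, field names, and example addresses are hypothetical. Real NTB hardware typically adds doorbell and scratchpad registers and vendor-specific setup, none of which is modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NtbWindow:
    """One NTB memory window (hypothetical model): accesses that hit the local
    BAR are forwarded to the peer domain at a translated address."""
    bar_base: int   # local PCI address where the window BAR is mapped
    size: int       # window size in bytes (a power of two, like any BAR)
    xlat_base: int  # peer-domain address programmed into the translation register

    def __post_init__(self) -> None:
        if self.size <= 0 or self.size & (self.size - 1):
            raise ValueError("window size must be a power of two")
        if self.bar_base % self.size:
            raise ValueError("BAR must be naturally aligned to its size")

    def translate(self, local_addr: int) -> Optional[int]:
        """Map a local address into the peer domain, or None if outside the window."""
        offset = local_addr - self.bar_base
        if 0 <= offset < self.size:
            return self.xlat_base + offset
        return None  # not claimed by this window, so not forwarded across the bridge


if __name__ == "__main__":
    window = NtbWindow(bar_base=0xF000_0000, size=0x10_0000, xlat_base=0x8_0000_0000)
    print(hex(window.translate(0xF000_1234)))
```

Because only addresses inside the window cross the bridge, each domain keeps its own address space and enumeration. This isolation is what makes the bridge "non-transparent", and the window is also what lets a processor reach the peer's memory with ordinary loads, stores, or DMA.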