SSZT798 February 2018

At some time in the future, society as a whole will be relatively comfortable letting artificial intelligence drive them safely from place to place. Exactly when this tipping point will occur is beyond my clairvoyance; however, I would expect the intelligence portion to be much more “real” than “artificial” at that point.

In the meantime, the practical use of artificial intelligence via deep learning techniques can play a significant role in the advancement of safety systems in vehicles that are much more within the grasp and use of the typical consumer base.

Deep learning is a concept that is decades old, but is now much more relevant given specific applications, techniques and (of course) the performance available on general computing platforms. The “deep” aspect of deep learning stems from the number of hidden layers implemented between the input and output that mathematically process (filter/convolve) the data between each layer toward the eventual result. In a vision system, a deep (vs. wide) network tends to promote more generalized recognition by building layer upon layer of identified features toward the final, desired output. The advantage of these multiple layers is the learned features at various levels of abstraction.
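As a minimal sketch of what "hidden layers between the input and output" means in practice, the Python below pushes an input through a stack of fully connected layers. The layer sizes, weights and the ReLU activation are illustrative assumptions, not values from any trained network; the point is only that depth is the number of processing stages the data passes through.

```python
def relu(values):
    """Clamp negative activations to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights yields one output."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Run inputs through every layer; ReLU follows all but the last layer."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical toy network: 2 inputs -> 2 hidden -> 2 hidden -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 0.5]),
    ([[2.0, -1.0]], [0.1]),
]
hidden_layer_count = len(layers) - 1  # the final layer is the output layer
output = forward([1.0, 2.0], layers)
```

Adding another `(weights, biases)` pair to `layers` makes the network deeper without changing `forward`.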

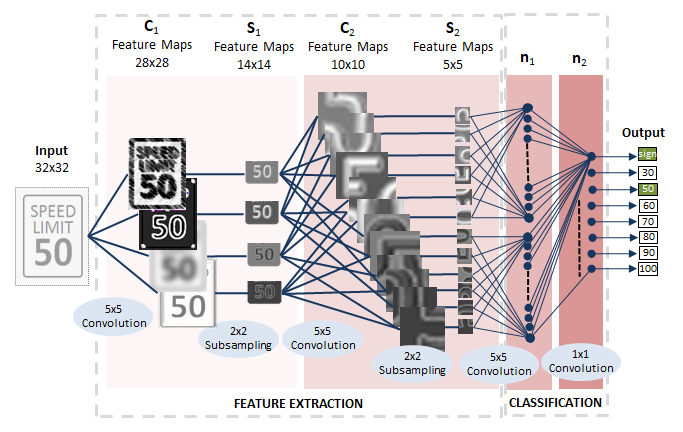

As an example, if you train a deep convolutional neural network (CNN) to classify images, the first layer learns to recognize very basic things like edges. The next layer learns to recognize collections of edges, which form shapes. The following layer learns to recognize collections of shapes like eyes or noses, and the last layer will learn even higher-order features like faces. Multiple layers are much better at generalizing because they learn all of the intermediate features between the raw data and the high-level classification. This generalization across many layers, as shown in Figure 1, is advantageous for an end-use case such as classifying traffic signs or perhaps identifying a specific face despite dark glasses, a hat and/or other types of obstruction.
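To make the "first layer learns edges" idea concrete, here is a small sketch of the convolution step applied to a tiny image. The filter is a hand-picked Sobel-style vertical edge detector, used here as a stand-in; in a trained CNN the filter values would be learned from data rather than written by hand, and the image is a made-up example.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Made-up 4x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical edge filter (assumption: stands in for a learned one).
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
# feature_map == [[0, 4, 4, 0], [0, 4, 4, 0]]
```

The feature map is nonzero only where the window straddles the dark-to-bright boundary, which is the kind of low-level response later layers can combine into shapes.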

Figure 1 Simplified Traffic Sign Example

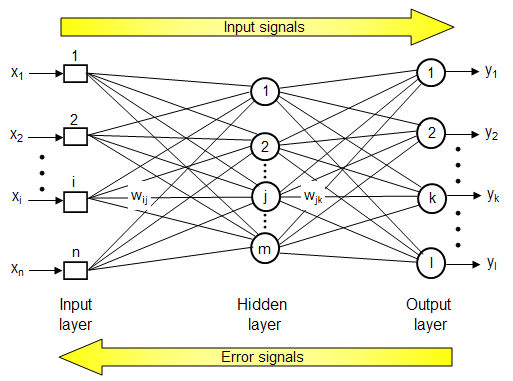

The “learning” aspect of deep learning comes from the iterations of training (back propagation) required to teach a layered network how to produce more accurate results given massive sets of known inputs and their desired outputs (Figure 2). This learning reduces errors over those iterations and eventually homes in on the results of the layered functions to meet the overall system requirements and provide a very robust solution for the target application. This type of learning/layering/interconnecting resembles a biological nervous system and therefore supports the notion of artificial intelligence.
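The training loop described above can be sketched on a toy problem. The example below is an illustration only: it assumes a tiny network with one hidden layer of sigmoid units, a squared-error loss, plain gradient descent and the four-row XOR table as the "known inputs and desired outputs". The function name `train_xor` and all hyperparameters are hypothetical choices, not part of any framework.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_xor(hidden=3, epochs=2000, learning_rate=0.5, seed=0):
    """Train a 2-input, one-hidden-layer network with back propagation.

    Returns the total squared-error loss recorded at the start of each epoch.
    """
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        grad_w1 = [[0.0, 0.0] for _ in range(hidden)]
        grad_b1 = [0.0] * hidden
        grad_w2 = [0.0] * hidden
        grad_b2 = 0.0
        loss = 0.0
        for x, target in data:
            # Forward pass: input -> hidden -> output.
            h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j])
                 for j in range(hidden)]
            y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            error = y - target
            loss += 0.5 * error * error
            # Backward pass: propagate the output error toward the input.
            delta_out = error * y * (1.0 - y)
            grad_b2 += delta_out
            for j in range(hidden):
                delta_hidden = delta_out * w2[j] * h[j] * (1.0 - h[j])
                grad_w2[j] += delta_out * h[j]
                grad_b1[j] += delta_hidden
                for i in range(2):
                    grad_w1[j][i] += delta_hidden * x[i]
        # Gradient descent update using the accumulated gradients.
        for j in range(hidden):
            w2[j] -= learning_rate * grad_w2[j]
            b1[j] -= learning_rate * grad_b1[j]
            for i in range(2):
                w1[j][i] -= learning_rate * grad_w1[j][i]
        b2 -= learning_rate * grad_b2
        losses.append(loss)
    return losses


losses = train_xor()
```

Comparing `losses[0]` with `losses[-1]` shows the error shrinking across iterations. Production frameworks automate the gradient computation and train on far larger data sets, but the forward, backward and update steps follow the same pattern.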

Figure 2 Simplified Back Propagation Example

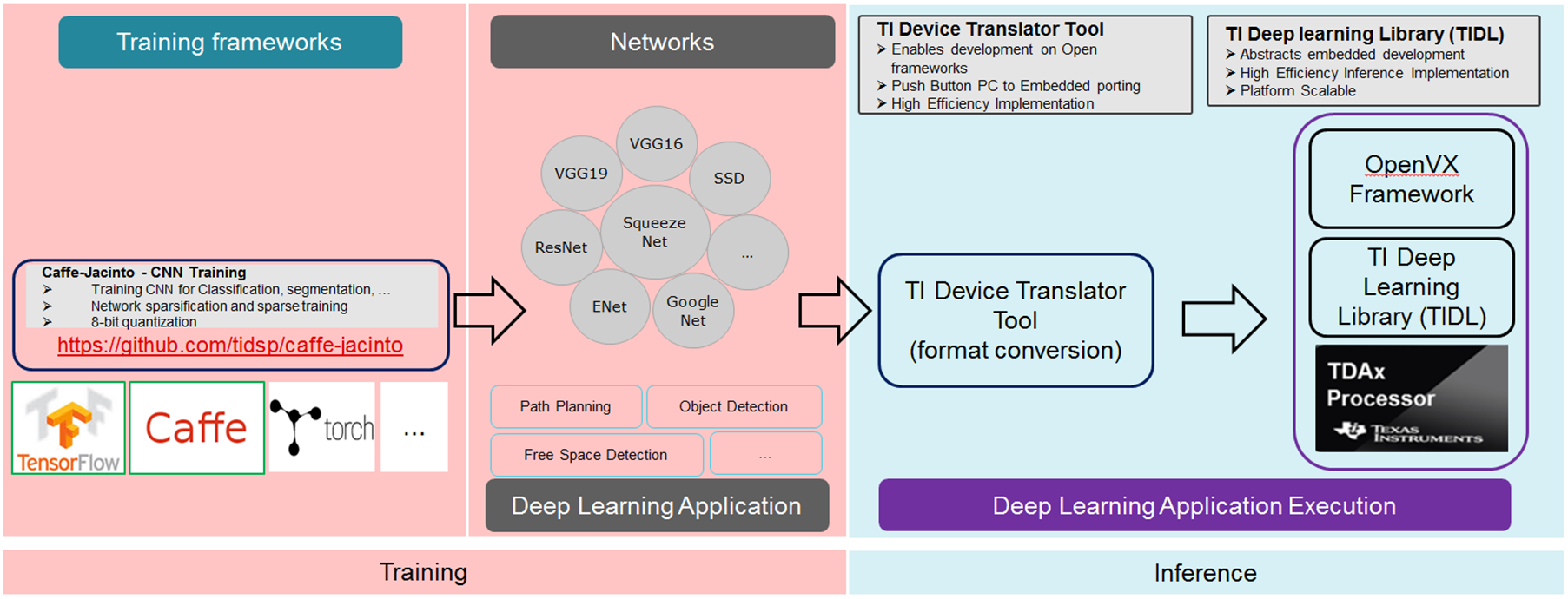

While the efficacy of deep learning seems here to stay, its practical application comes with some challenges. If the application is an embedded one that is sensitive to system constraints (such as overall cost, power consumption and scaled computing capabilities), the design of the system to support deep learning must take these constraints into account. Designers can use front-end tools such as Caffe (a deep learning framework originally developed at the University of California, Berkeley) or TensorFlow (the brainchild of Google) to develop the overall network, layers and corresponding functions, as well as to train and verify the target end results. Once this is accomplished, a tool targeted for an embedded processor can translate the output of the front-end tool to software that is executable on that embedded device.

The TI deep learning (TIDL) framework (Figure 3) supports deep learning/CNN-based applications running on TI TDAx automotive processors to provide very compelling advanced driver assistance system (ADAS) functions on efficient, embedded platforms.

Figure 3 TIDL Framework (TI Device Translator and Deep Learning Library)

The TIDL framework provides quick embedded development and platform abstraction for software scalability, highly optimized kernels implemented on TI hardware for accelerating CNNs, and a translator that enables network translation from open frameworks (like Caffe and TensorFlow) to embedded frameworks using TIDL application programming interfaces (APIs).

For more details about this solution, read the white paper, “TIDL: Embedded Low Power Deep Learning,” and check out the videos listed in Additional Resources.

Additional Resources

- Watch these video demonstrations of deep-learning-based semantic segmentation: