SPRACX1A April 2021 – April 2021 TDA4VM, TDA4VM-Q1

1 Introduction

Accurate ego-localization within a map is an essential requirement for autonomous navigation. In the ADAS and robotics communities, this is referred to as the localization problem. When a vehicle or robot is outdoors, localization can typically be handled, to some extent, by an Inertial Navigation System (INS), which combines Global Positioning System (GPS) data with measurements from an Inertial Measurement Unit (IMU) to localize the vehicle or robot. However, the GPS receiver can only use a satellite's signal when nothing obstructs the path between the two, that is, when there is a clear line of sight (LOS) to the satellite. When the vehicle or robot is in a garage, warehouse, or tunnel, the LOS to the satellites is obstructed and the accuracy of the GPS position degrades substantially. Furthermore, even when GPS is available, it can only position a vehicle to within a radius of approximately 5 meters [1]. This error, combined with errors in the IMU, results in noisy localization that may not be accurate enough for highly complex ADAS or robotics tasks.

Visual localization is a popular method employed by the ADAS and robotics communities to meet the stringent localization requirements of autonomous navigation. As the name implies, visual localization uses images from one or more cameras to localize the vehicle or robot within a map. For this task, a map of the environment must be constructed and saved before localization can take place. Thus far, the more popular localization solutions have been based on LiDAR, because LiDAR measurements are dense and precise. However, although LiDAR-based localization is highly accurate, it is cost-prohibitive for the everyday vehicle, because high-precision LiDARs typically cost on the order of thousands of dollars. It is therefore critical that a cheaper alternative, such as visual localization, be made available.

In robotics and automotive applications, the computations for localization, as well as for other tasks, need to be performed within the vehicle or robot. It is therefore critical that the vehicle or robot be equipped with high-performance embedded processors that operate at low power. The TI Jacinto 7 family of processors was designed from the ground up with applications such as visual localization in mind. The Jacinto 7 family is the culmination of two decades' worth of TI experience in the automotive field and many decades of TI experience in electronics. These processors are equipped with deep learning engines that boast one of the best power-to-performance ratios of any device on the market today, together with Hardware Accelerators (HWAs) for specific Computer Vision (CV) tasks and Digital Signal Processors (DSPs) that can efficiently perform related CV tasks.

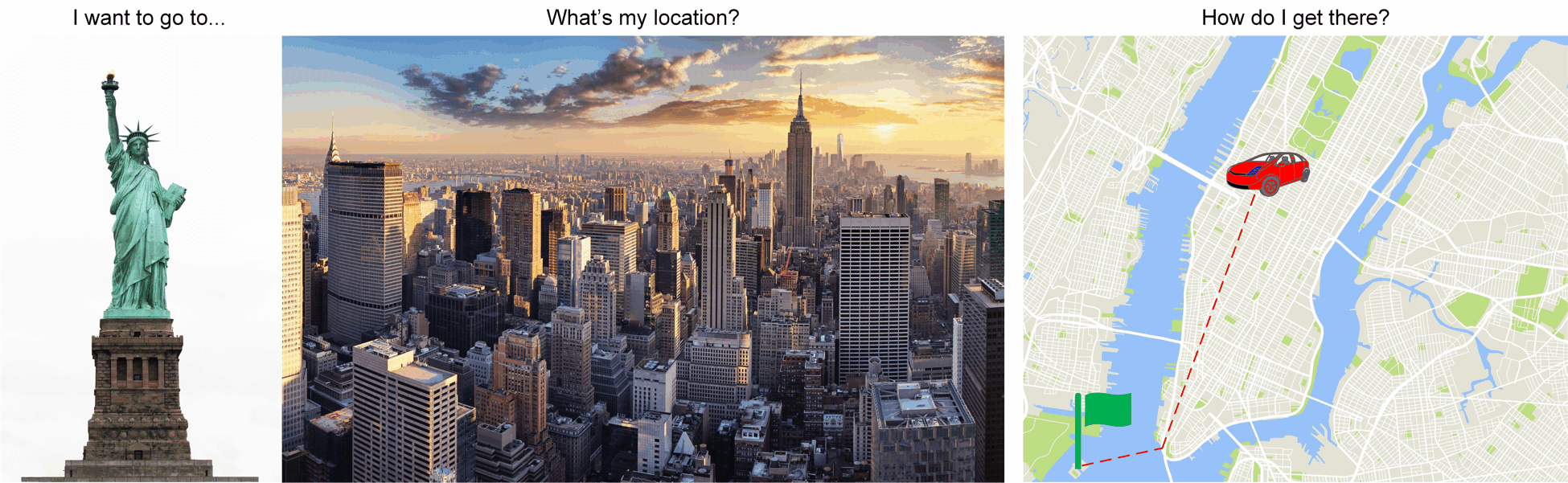

Figure 1-1 Why Localization?