SPRACX1A April 2021 – April 2021 TDA4VM, TDA4VM-Q1

2.1 Key Point Extraction and Descriptor Computation

The Computer Vision community uses a variety of techniques to extract key-points. These techniques fall into one of two categories: traditional Computer Vision (CV) based feature extraction methods such as SIFT, SURF [2], and KAZE [3], or Deep Neural Network (DNN) based feature extraction methods.

An important advantage of DNN-based key-point extraction is that the process can be performed using a generic Deep Learning accelerator. In contrast, traditional CV-based key-point extractors require either specialized hardware accelerators (HWAs) or general-purpose processor cores. The former limits the types of features the customer can use, and the latter is prohibitively inefficient. As a consequence, DNN-based key-point extraction becomes the more practical solution.

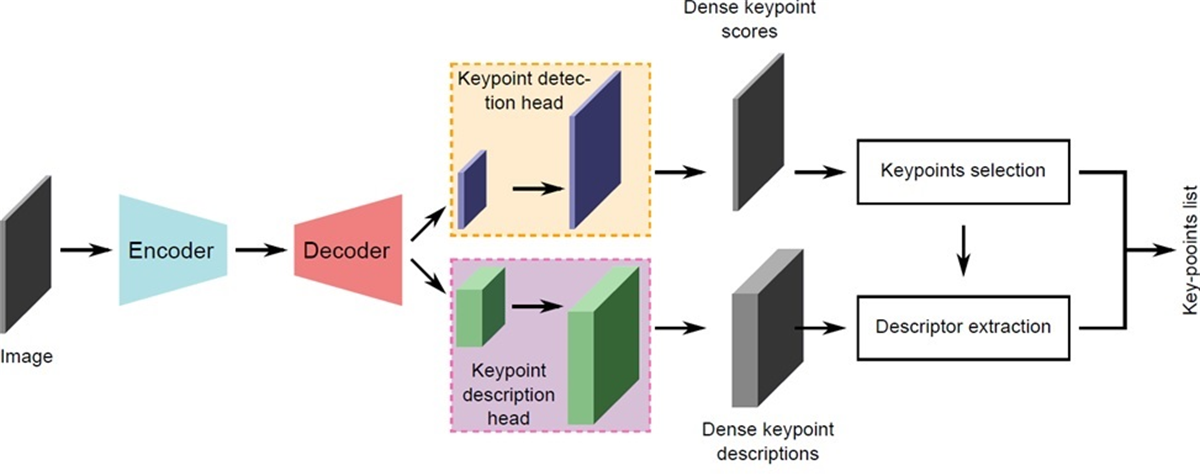

This document describes a DNN-based feature extraction method for localization. In particular, the algorithm described here learns feature descriptors similar to KAZE [3] in a supervised manner using DNNs and is therefore named DKAZE, or Deep KAZE. Using the DKAZE framework, one can extract both key-points and the corresponding descriptors as shown in [3]. More details on this algorithm can be found here. Once key-points are extracted, the next step is to match the extracted features with features in the stored 3D map and thereby estimate the pose of the vehicle/robot. The network structure of the DKAZE DNN is shown in Figure 2-2.

Figure 2-2 DKAZE Network Structure
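
The matching step mentioned above, in which descriptors extracted from the current image are compared against descriptors stored with the 3D map, can be illustrated with a minimal sketch. This section does not specify the matching method, so the example assumes a brute-force nearest-neighbor search with a ratio test, a common choice for KAZE-like floating-point descriptors. The function name `match_descriptors`, the row-per-descriptor array layout, and the 0.8 ratio threshold are illustrative assumptions, not part of the DKAZE framework.

```python
import numpy as np


def match_descriptors(query_desc, map_desc, ratio=0.8):
    """Brute-force descriptor matching with a nearest-neighbor ratio test.

    Hypothetical helper for illustration only.
    query_desc: (N, D) descriptors extracted from the current image.
    map_desc:   (M, D) descriptors stored with the 3D map, M >= 2.
    Returns a (K, 2) integer array of (query_index, map_index) pairs.
    """
    q = np.asarray(query_desc, dtype=np.float64)
    m = np.asarray(map_desc, dtype=np.float64)

    # Squared Euclidean distance between every query/map descriptor pair.
    d2 = (
        np.sum(q ** 2, axis=1)[:, None]
        - 2.0 * (q @ m.T)
        + np.sum(m ** 2, axis=1)[None, :]
    )
    d2 = np.maximum(d2, 0.0)  # clamp small negatives caused by rounding

    # Indices of the closest and second-closest map descriptor per query.
    order = np.argsort(d2, axis=1)[:, :2]
    rows = np.arange(q.shape[0])
    best, second = order[:, 0], order[:, 1]
    d_best = np.sqrt(d2[rows, best])
    d_second = np.sqrt(d2[rows, second])

    # Keep a match only if it is clearly better than the runner-up.
    keep = d_best < ratio * d_second
    return np.stack([rows[keep], best[keep]], axis=1)
```

Each returned pair links a key-point in the current image to a map feature whose 3D position is known. A pose solver, not shown here, would then consume these 2D-to-3D correspondences. The ratio test discards ambiguous matches, which trades some recall for fewer outliers reaching the pose estimation stage.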
