SPRADB0 May 2023 AM62A1-Q1 , AM62A3 , AM62A3-Q1 , AM62A7 , AM62A7-Q1 , AM67A , AM68A , AM69A

2.2 Labelling Images

Deep learning models such as neural networks are typically trained with supervised learning. Supervised training requires that each piece of data have an associated label, which tells the training process what pertinent information the data contains. The type of label depends on the type of problem being solved. For each object, an object detection model requires coordinates (typically two 2D points representing a bounding box) and the object's type, or class; one image can contain multiple labeled objects. Object detection is the right choice for the retail-scanner application because part of its purpose is to recognize multiple items quickly and automatically.
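As a minimal sketch of what a single object detection label holds, the Python below stores a bounding box as two corner points plus a class name, and converts it to the `[x, y, width, height]` box layout (top-left corner plus size) used by the COCO JSON format. The `ObjectLabel` type and the class names are hypothetical illustrations, not part of TI's tools or Label Studio.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ObjectLabel:
    """One labeled object (hypothetical type): two corner points plus a class."""
    x_min: float   # left edge, in pixels
    y_min: float   # top edge, in pixels
    x_max: float   # right edge, in pixels
    y_max: float   # bottom edge, in pixels
    category: str  # object class; names here are illustrative only

    def __post_init__(self) -> None:
        # A valid box must have positive width and height.
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError("max corner must be right of and below min corner")

    def to_coco_bbox(self) -> List[float]:
        """Return the box as COCO-style [x, y, width, height]."""
        return [self.x_min, self.y_min,
                self.x_max - self.x_min, self.y_max - self.y_min]


# One image can contain several labeled objects.
image_labels = [
    ObjectLabel(10, 20, 110, 220, "apple"),
    ObjectLabel(150, 40, 300, 180, "sandwich"),
]

for label in image_labels:
    print(label.category, label.to_coco_bbox())
```

The two-corner form is convenient while drawing boxes; the width/height form is what the COCO export stores, so the conversion is a simple subtraction.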

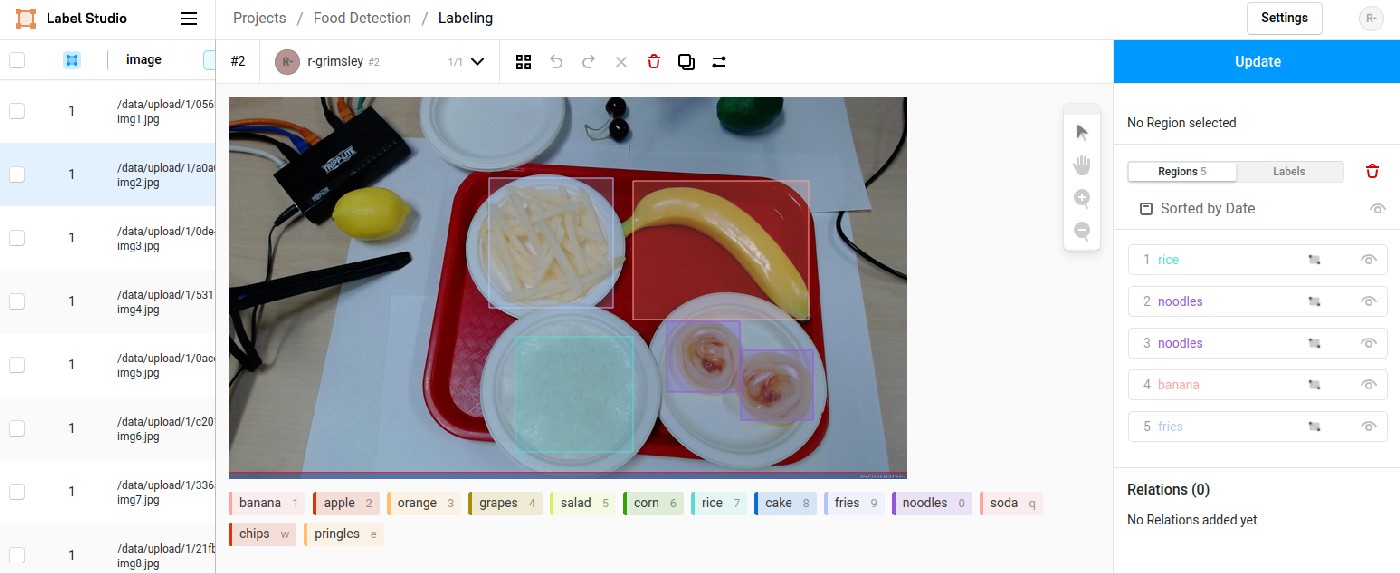

Unfortunately, labeling is generally a tedious task, and if it is done poorly, model accuracy suffers. Several tools exist to ease this process. TI's Edge AI Studio is an online cloud tool for labeling and most other model development tasks for TI processors; however, it was not available during the development of the retail-checkout application. An alternative tool for offline (local) labeling is Label Studio (“label-studio”), whose labeling interface is shown in Figure 2-2. The figure shows an image from the food-recognition dataset in which multiple objects have colored boxes drawn around them; the color of each box indicates the class the object belongs to.

Figure 2-2 Label Studio interface. (Additional objects are intentionally left in the image and unlabeled. Allowing clutter can improve robustness and precision.)

Once all images are labeled, the dataset can be exported in one of several formats. TI's training tools use the COCO JSON format. For this format, the export is a ZIP archive containing a folder of images and a JSON file named result.json.
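Before training, it can help to sanity-check the export, for example to spot classes with very few labeled examples. The Python below is a minimal sketch, assuming the archive contains a file named result.json with the standard COCO top-level keys `images`, `annotations`, and `categories`; the function names and the demo data are hypothetical, and only the standard library (`zipfile`, `json`) is used.

```python
import io
import json
import zipfile
from collections import Counter
from typing import BinaryIO, Dict, Union


def load_coco_from_zip(archive: Union[str, BinaryIO]) -> dict:
    """Open an exported ZIP (path or file object) and parse its result.json."""
    with zipfile.ZipFile(archive) as zf:
        # Locate result.json wherever it sits inside the archive.
        names = [n for n in zf.namelist() if n.split("/")[-1] == "result.json"]
        if not names:
            raise FileNotFoundError("result.json not found in archive")
        with zf.open(names[0]) as f:
            return json.load(f)


def count_labels_per_class(coco: dict) -> Dict[str, int]:
    """Count annotations (labeled boxes) per category name."""
    id_to_name = {c["id"]: c["name"] for c in coco["categories"]}
    return dict(Counter(id_to_name[a["category_id"]] for a in coco["annotations"]))


# Demo with a tiny in-memory archive (hypothetical data, for illustration only).
demo = {
    "images": [{"id": 0, "file_name": "images/0.jpg", "width": 640, "height": 480}],
    "categories": [{"id": 0, "name": "apple"}, {"id": 1, "name": "sandwich"}],
    "annotations": [
        {"id": 0, "image_id": 0, "category_id": 0, "bbox": [10, 20, 100, 200]},
        {"id": 1, "image_id": 0, "category_id": 0, "bbox": [300, 50, 80, 90]},
        {"id": 2, "image_id": 0, "category_id": 1, "bbox": [150, 40, 150, 140]},
    ],
}
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("result.json", json.dumps(demo))
buf.seek(0)

coco = load_coco_from_zip(buf)
print(count_labels_per_class(coco))  # {'apple': 2, 'sandwich': 1}
```

With a real export, pass the path of the downloaded ZIP to `load_coco_from_zip` instead of the in-memory buffer.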