SPRY344A January 2022 – March 2023 AM67, AM67A, AM68, AM68A, AM69, AM69A, TDA4AEN-Q1, TDA4AH-Q1, TDA4AL-Q1, TDA4AP-Q1, TDA4APE-Q1, TDA4VE-Q1, TDA4VEN-Q1, TDA4VH-Q1, TDA4VL-Q1, TDA4VM, TDA4VM-Q1, TDA4VP-Q1, TDA4VPE-Q1

Designing edge AI systems with TI vision processors

TI's vision processor portfolio was created to enable efficient, scalable AI processing in applications where size and power constraints are key design challenges.

These processors, which include the AM6xA and TDA4 processor families, use an SoC architecture with extensive integration for vision systems: Arm® Cortex®-A72 or Cortex-A53 CPUs, internal memory, interfaces, and hardware accelerators that deliver from 2 to 32 tera operations per second (TOPS) of AI processing for deep learning.

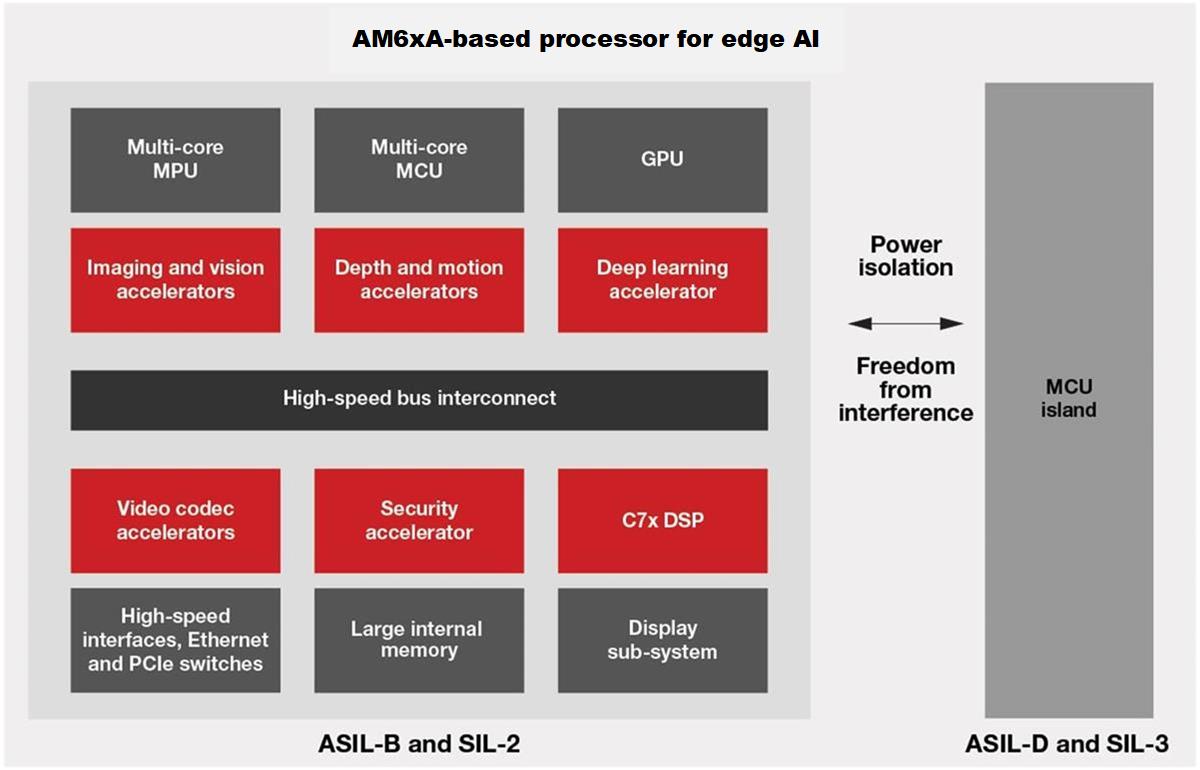

The AM6xA family is built around Arm Cortex-A MPUs and offloads computationally intensive tasks such as deep learning inference, imaging, vision, video and graphics processing to specialized hardware accelerators and programmable cores, as shown in Figure 2. Integrating these advanced system components into the processors helps edge AI designers streamline the system bill of materials. The portfolio scales from AM62A processors for low-power applications with one to two cameras to the AM68A (up to eight cameras) and the AM69A (up to 12 cameras).

Figure 2 TI vision processor edge AI system partitioning.
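
The camera-count scaling described above can be written down as a small lookup. The following is only an illustrative sketch: the table `MAX_CAMERAS` and the function `smallest_family_for` are hypothetical names introduced here, and the limits are simply the figures quoted in this section. A real device selection would also need to weigh TOPS, sensor resolution, frame rate and power budget, none of which are modeled.

```python
from typing import Optional

# Camera limits as quoted in this section, ordered from smallest to largest.
# Hypothetical table for illustration; it is not taken from a TI tool or API.
MAX_CAMERAS = [
    ("AM62A", 2),
    ("AM68A", 8),
    ("AM69A", 12),
]


def smallest_family_for(cameras: int) -> Optional[str]:
    """Return the first listed family whose quoted camera limit covers `cameras`."""
    if cameras < 1:
        raise ValueError("camera count must be at least 1")
    for family, limit in MAX_CAMERAS:
        if cameras <= limit:
            return family
    # More cameras than any family listed in this section supports.
    return None
```

For example, under these assumptions `smallest_family_for(4)` selects the AM68A, since four cameras exceed the one-to-two camera range quoted for the AM62A.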
